Projects

Federated Learning for Fault Diagnosis

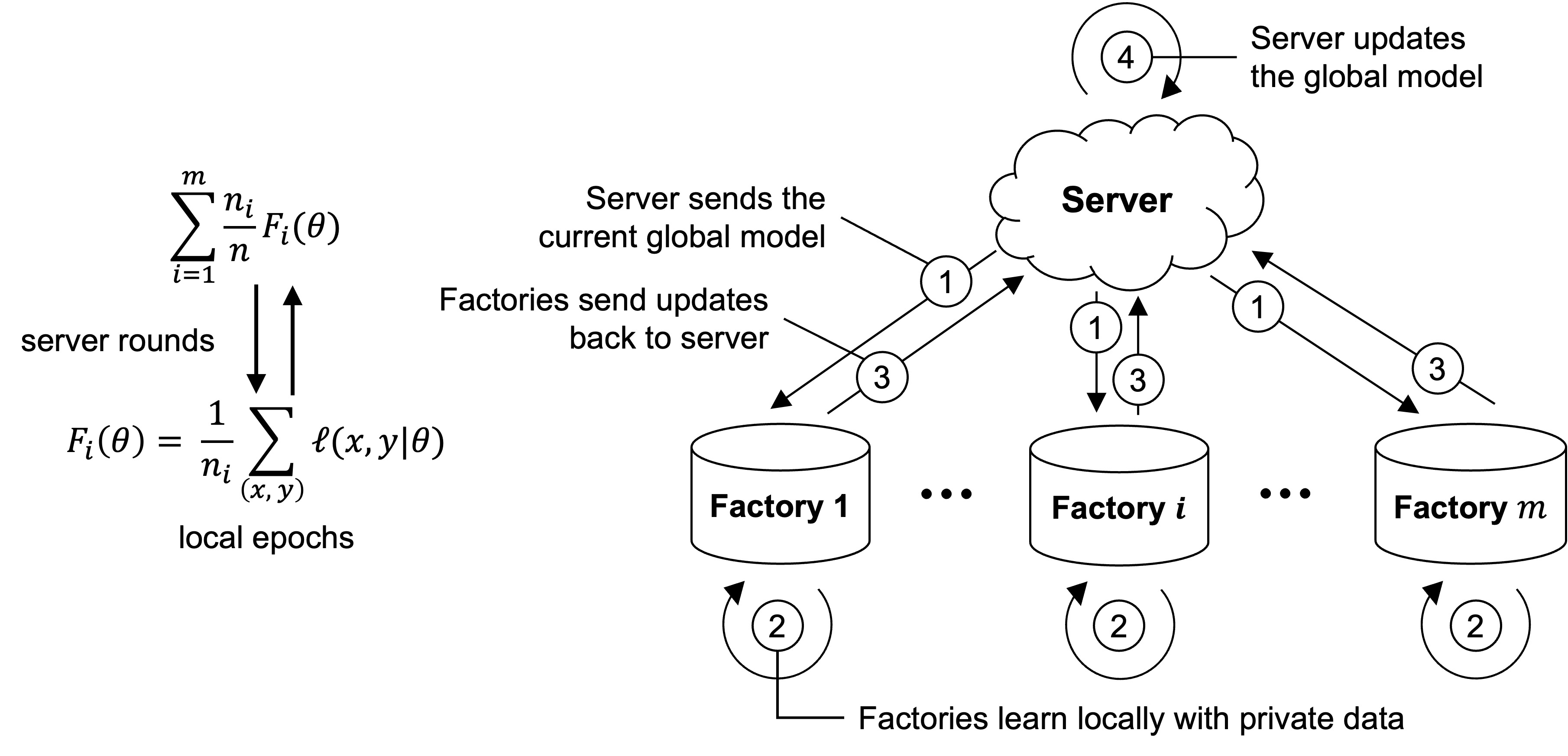

Federated learning (FL) is a distributed machine learning paradigm that enables collaborative model training without sharing raw data: each client trains on its own local data and shares only model updates with a central server. In manufacturing, FL can be used to train fault diagnosis models across multiple factories or production lines without compromising data privacy. However, FL faces challenges such as data heterogeneity across clients and communication overhead. My research focuses on developing FL algorithms that address these challenges in manufacturing settings.
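To make the basic FL loop concrete, here is a minimal sketch of federated averaging (FedAvg), the standard FL baseline. It is not necessarily the algorithm used in these projects. Each hypothetical client (for example, one factory) runs a few gradient steps on its private data and sends back only model weights. The server then averages those weights in proportion to each client's sample count. The 1-D linear model, the learning rate, the round counts, and the synthetic data are all illustrative assumptions.

```python
import random

def local_update(weights, data, lr=0.1, epochs=5):
    """A few epochs of full-batch gradient descent on one client's private data.

    Hypothetical model: y = w0 + w1 * x. Only the updated weights are returned,
    so the raw (x, y) samples never leave the client.
    """
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        g0 = sum(w0 + w1 * x - y for x, y in data) / n
        g1 = sum((w0 + w1 * x - y) * x for x, y in data) / n
        w0, w1 = w0 - lr * g0, w1 - lr * g1
    return [w0, w1]


def fed_avg(global_weights, clients, rounds=50):
    """FedAvg server loop: average client models weighted by local sample count."""
    for _ in range(rounds):
        updates = [(local_update(global_weights, data), len(data)) for data in clients]
        total = sum(n for _, n in updates)
        global_weights = [
            sum(w[i] * n for w, n in updates) / total
            for i in range(len(global_weights))
        ]
    return global_weights


# Three hypothetical factories, each holding noisy samples of y = 2 + 3x.
random.seed(0)
clients = []
for _ in range(3):
    xs = [random.uniform(-1, 1) for _ in range(40)]
    clients.append([(x, 2 + 3 * x + random.gauss(0, 0.1)) for x in xs])

w = fed_avg([0.0, 0.0], clients)
print(w)  # should be close to [2, 3]
```

The server never sees `clients[i]` itself, only the weight vectors returned by `local_update`.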

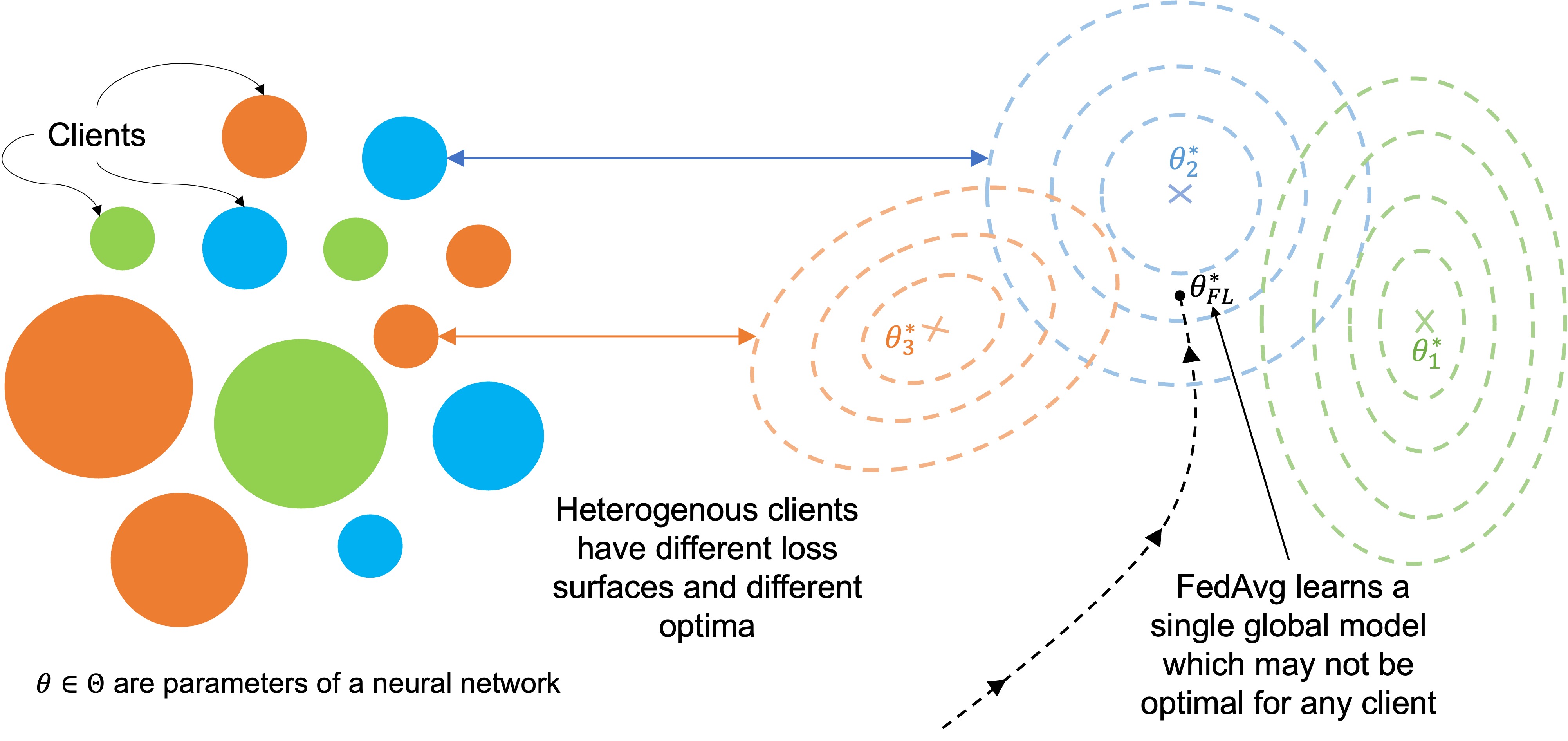

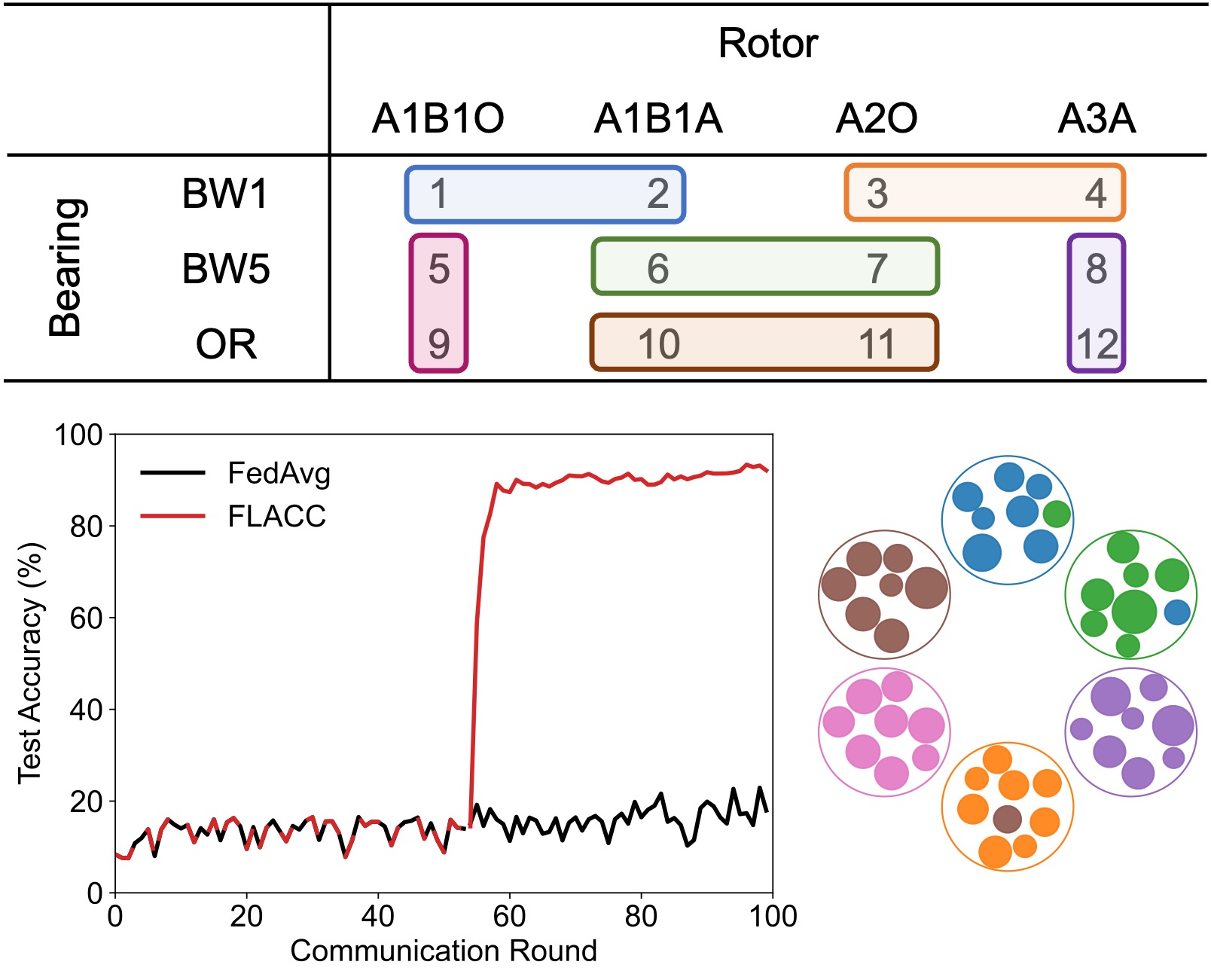

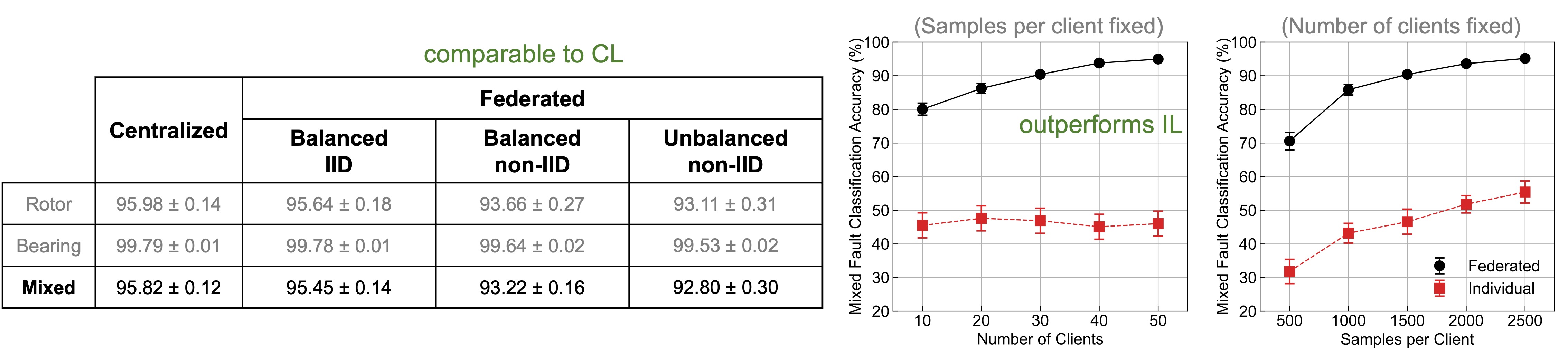

In one project, we developed a clustered FL approach to address data heterogeneity in fault diagnosis. Clients are grouped by the similarity of their data distributions, and a separate model is trained for each cluster. This approach outperforms standard FL when client data distributions are heterogeneous.
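Below is a minimal sketch of the clustered idea, built on stated assumptions. It is not the project's actual method.

- Each client shares only a coarse summary of its input distribution. Here that summary is the mean and standard deviation, which is a hypothetical choice and still leaks some information.
- The server groups clients with k-means.
- Each cluster gets its own model, formed by sample-weighted averaging of local least-squares fits.

The clustering criterion, the one-shot aggregation, and the names `summarize`, `kmeans`, `local_fit`, and `clustered_fl` are all illustrative.

```python
import random

def summarize(data):
    """Hypothetical distribution summary a client shares: mean and std of its inputs."""
    xs = [x for x, _ in data]
    m = sum(xs) / len(xs)
    return (m, (sum((x - m) ** 2 for x in xs) / len(xs)) ** 0.5)


def kmeans(points, k, iters=20):
    """Plain k-means with deterministic farthest-point initialisation."""
    def d2(p, q):
        return sum((a - b) ** 2 for a, b in zip(p, q))
    centroids = [list(points[0])]
    while len(centroids) < k:
        centroids.append(list(max(points, key=lambda p: min(d2(p, c) for c in centroids))))
    labels = [0] * len(points)
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: d2(p, centroids[c])) for p in points]
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(v) / len(members) for v in zip(*members)]
    return labels


def local_fit(data):
    """Closed-form least-squares fit of y = w0 + w1 * x on one client's data."""
    n = len(data)
    mx = sum(x for x, _ in data) / n
    my = sum(y for _, y in data) / n
    w1 = (sum((x - mx) * (y - my) for x, y in data)
          / sum((x - mx) ** 2 for x, _ in data))
    return (my - w1 * mx, w1)


def clustered_fl(clients, k):
    """Cluster clients by their summaries, then aggregate local fits per cluster."""
    labels = kmeans([summarize(d) for d in clients], k)
    models = {}
    for c in set(labels):
        fits = [(local_fit(d), len(d)) for d, lab in zip(clients, labels) if lab == c]
        total = sum(n for _, n in fits)
        models[c] = tuple(sum(w[i] * n for w, n in fits) / total for i in range(2))
    return labels, models


# Six hypothetical production lines from two regimes with different inputs and behaviour.
random.seed(1)
clients = []
for group in [0, 1, 0, 1, 0, 1]:
    xs = [random.gauss(0.0 if group == 0 else 5.0, 1.0) for _ in range(30)]
    f = (lambda x: 1 + 2 * x) if group == 0 else (lambda x: 10 - x)
    clients.append([(x, f(x) + random.gauss(0, 0.1)) for x in xs])

labels, models = clustered_fl(clients, k=2)
print(labels, models)
```

A single global model fitted to all six clients would have to compromise between the two input-output relationships. The per-cluster models recover each one separately.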

In another project, we investigated how different types of faults affect FL performance. We found that FL is more robust to some fault types than to others, and we developed strategies to mitigate the impact of these faults.

Gaussian Process Regression for Surface Interpolation

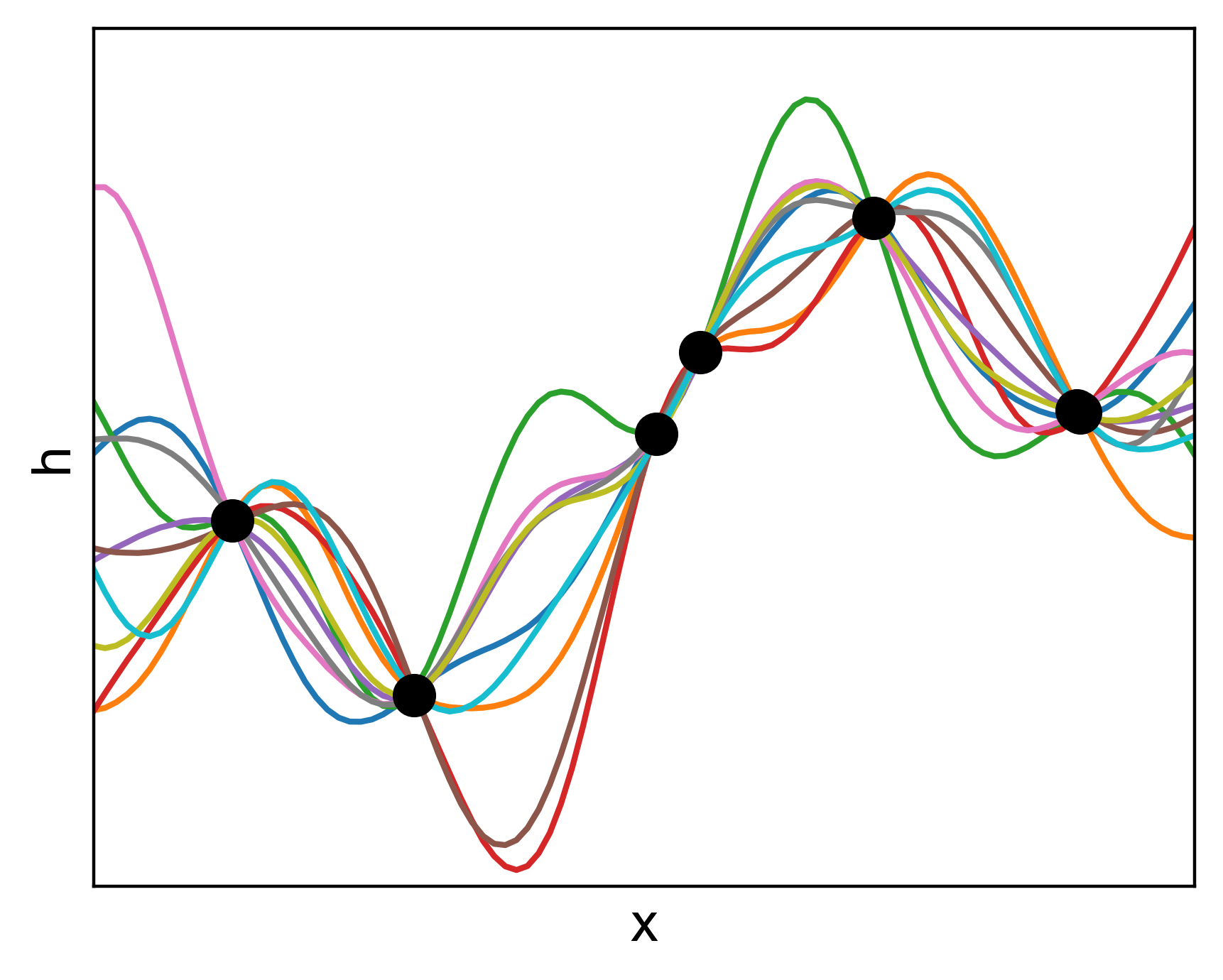

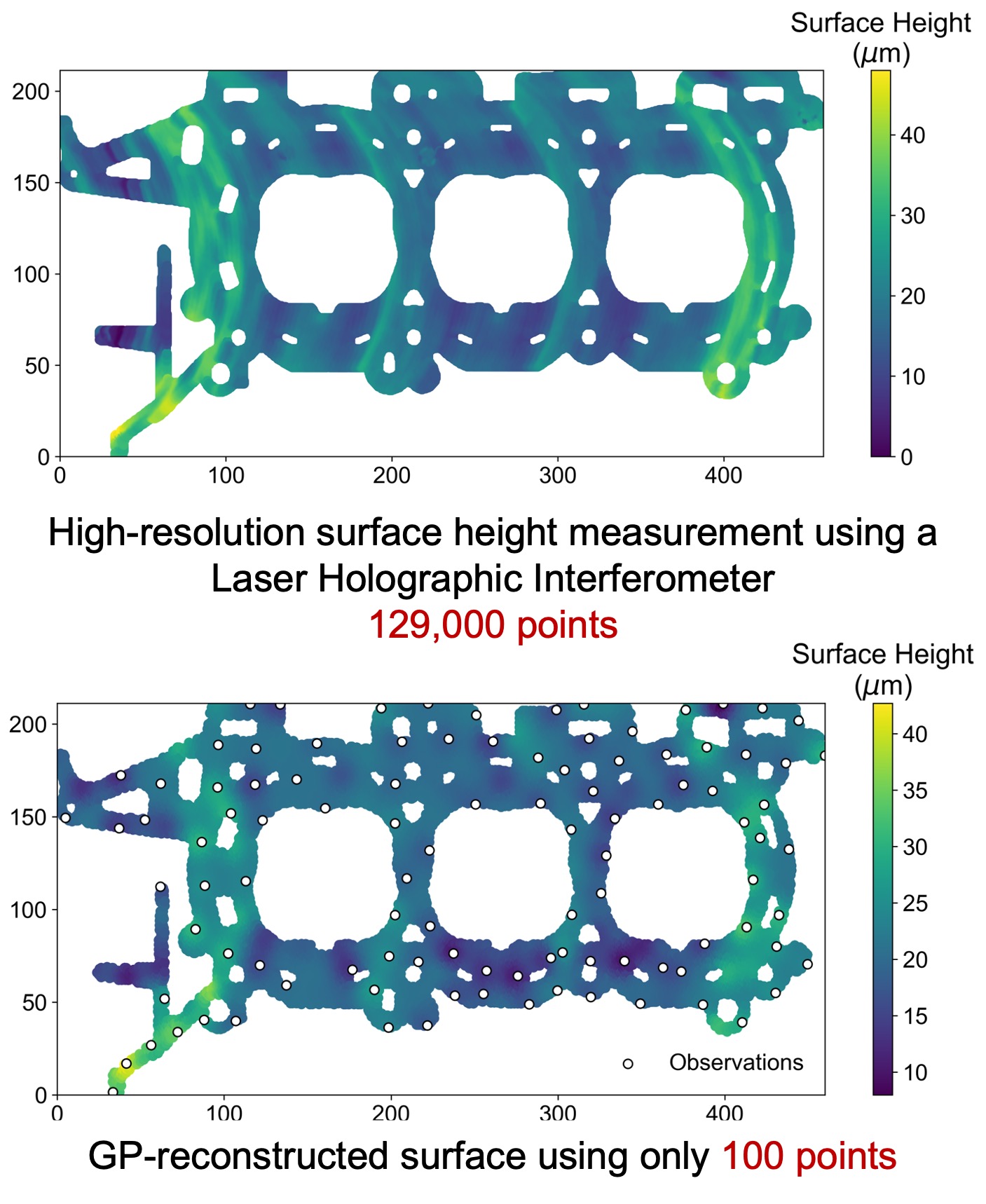

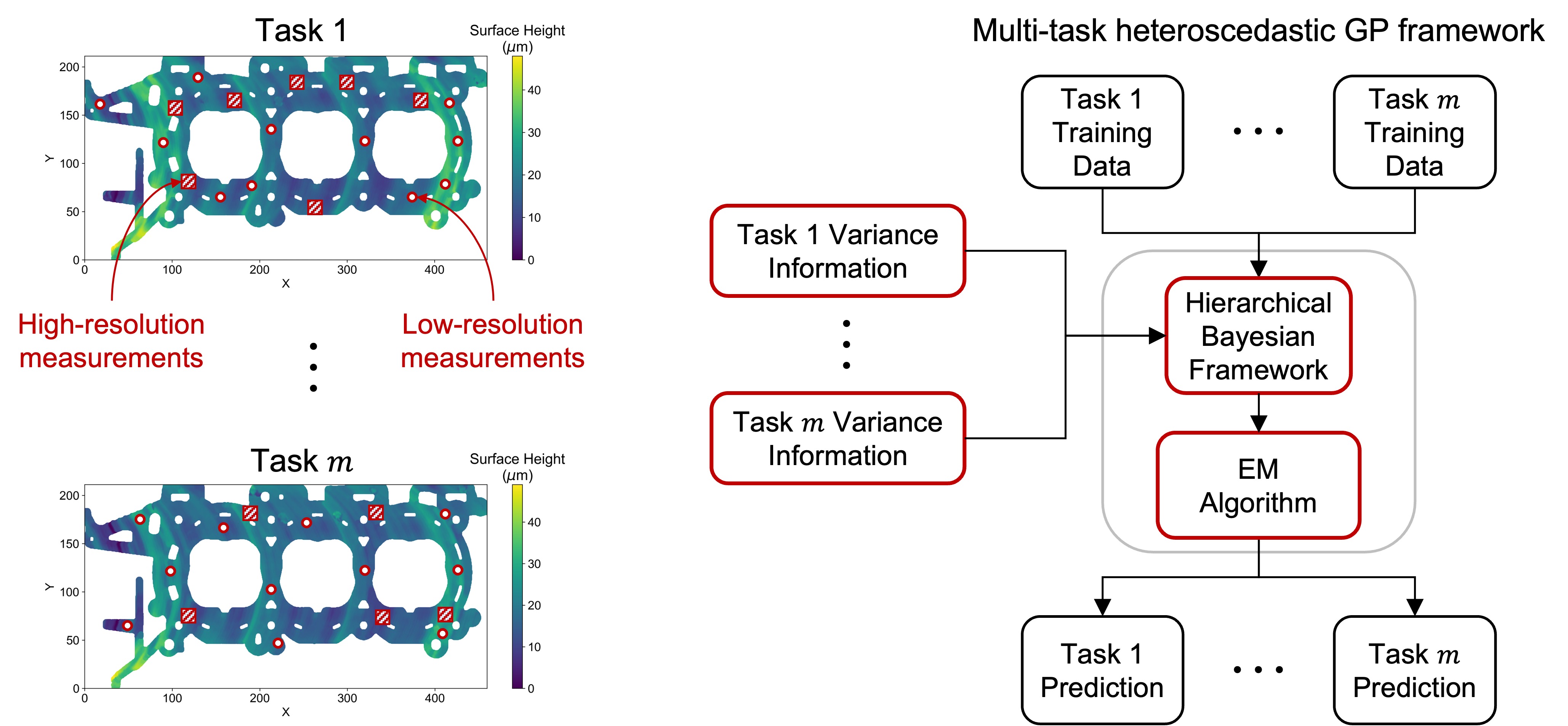

Gaussian process (GP) regression is a powerful non-parametric Bayesian approach for modeling complex functions. In manufacturing, GP regression can be used for surface interpolation (predicting surface height at unmeasured locations from a limited set of measurements), which is essential for quality control and process optimization. My research focuses on developing GP-based methods for surface interpolation in manufacturing applications.
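The following is a minimal sketch of GP regression for surface interpolation. It assumes a zero-mean prior and a squared-exponential (RBF) kernel with fixed, hand-picked hyperparameters. In practice these are fitted, for example by maximizing the marginal likelihood.

Given measured heights `z` at 2-D locations `X`, the posterior at a query point x* is:

- mean: k*ᵀ(K + σ²I)⁻¹z
- variance: k(x*, x*) − k*ᵀ(K + σ²I)⁻¹k*

Here σ² is a tiny jitter added for numerical stability. The test surface and the measurement grid are hypothetical.

```python
import math

def rbf(p, q, length=0.5, var=1.0):
    """Squared-exponential kernel between two 2-D locations (x, y)."""
    d2 = (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
    return var * math.exp(-0.5 * d2 / length ** 2)


def cholesky(A):
    """Lower-triangular L with L L^T = A (A symmetric positive definite)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def chol_solve(L, b):
    """Solve (L L^T) x = b by forward then backward substitution."""
    n = len(L)
    y = [0.0] * n
    for i in range(n):
        y[i] = (b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_predict(X, z, queries, noise=1e-6):
    """Posterior mean and variance of a zero-mean GP at each query location."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    alpha = chol_solve(L, z)
    means, variances = [], []
    for q in queries:
        kq = [rbf(q, a) for a in X]
        means.append(sum(k * a for k, a in zip(kq, alpha)))
        v = chol_solve(L, kq)
        variances.append(rbf(q, q) - sum(k * vi for k, vi in zip(kq, v)))
    return means, variances


# Hypothetical surface z = sin(x) cos(y), measured on a 5 x 5 grid over [0, 2]^2.
X = [(0.5 * i, 0.5 * j) for i in range(5) for j in range(5)]
z = [math.sin(x) * math.cos(y) for x, y in X]
means, variances = gp_predict(X, z, [(0.75, 0.75), (1.0, 1.0)])
print(means, variances)
```

The posterior variance is near zero at the measured point (1.0, 1.0) and larger at the unmeasured cell centre (0.75, 0.75). This gives an uncertainty estimate alongside each interpolated height.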

In one project, we developed a filtered kriging approach for surface interpolation (kriging is the geostatistical name for GP regression). The approach combines GP regression with filtering techniques to handle noise and outliers in surface measurements, and it outperforms standard GP regression on noisy data.
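The exact filtering used in the filtered kriging project is not described here. The following is therefore only a sketch of two generic ingredients, both of which are assumptions:

1. A nugget (measurement-noise) term in the covariance. Predictions are made from the noise-free part, so the noise is filtered out rather than interpolated.
2. A swapped-in outlier filter. It repeatedly drops the measurement with the largest standardized leave-one-out (LOO) residual until none exceeds a threshold. That residual has the closed form αᵢ/√[K⁻¹]ᵢᵢ, where α = K⁻¹z.

The kernel, the noise level, the threshold of 4, and the synthetic 1-D profile are all hypothetical.

```python
import math
import random

def rbf(a, b, length=0.5, var=1.0):
    """Squared-exponential kernel on 1-D positions along a surface profile."""
    return var * math.exp(-0.5 * (a - b) ** 2 / length ** 2)


def inverse(A):
    """Gauss-Jordan matrix inverse with partial pivoting (fine for small n)."""
    n = len(A)
    M = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [v / pivot for v in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    return [row[n:] for row in M]


def fit(X, z, noise):
    """Return K^-1 and alpha = K^-1 z, where K includes the nugget (noise) term."""
    n = len(X)
    K = [[rbf(X[i], X[j]) + (noise if i == j else 0.0) for j in range(n)] for i in range(n)]
    Kinv = inverse(K)
    return Kinv, [sum(Kinv[i][j] * z[j] for j in range(n)) for i in range(n)]


def robust_filtered_kriging(X, z, noise, threshold=4.0):
    """Drop the worst LOO outlier one at a time, then predict the noise-free surface."""
    X, z, idx, removed = list(X), list(z), list(range(len(X))), []
    while True:
        Kinv, alpha = fit(X, z, noise)
        # Standardised leave-one-out residual of point i: alpha_i / sqrt([K^-1]_ii).
        scores = [abs(alpha[i]) / math.sqrt(Kinv[i][i]) for i in range(len(X))]
        worst = max(range(len(X)), key=lambda i: scores[i])
        if scores[worst] < threshold:
            break
        removed.append(idx.pop(worst))
        X.pop(worst)
        z.pop(worst)

    def predict(s):
        # No nugget in k(s, X): the measurement noise is filtered out, not interpolated.
        return sum(rbf(s, x) * a for x, a in zip(X, alpha))

    return predict, removed


# Hypothetical noisy profile of sin(x) with one gross outlier at index 10 (x = 2.0).
random.seed(3)
X = [0.2 * i for i in range(21)]
z = [math.sin(x) + random.gauss(0, 0.05) for x in X]
z[10] += 2.0
predict, removed = robust_filtered_kriging(X, z, noise=0.05 ** 2)
print(removed, predict(2.0))
```

Removing one point at a time matters. An outlier also distorts the LOO predictions of its neighbours, so dropping every flagged point at once could discard good measurements.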

In another project, we investigated multi-task GP regression for surface interpolation. By exploiting correlations between multiple surfaces, the approach improves interpolation accuracy, which is particularly useful when several related surfaces must be interpolated together.
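Here is a minimal sketch of multi-task GP regression using the intrinsic coregionalization model (ICM), one standard multi-task kernel. The source does not say which multi-task formulation was used.

In the ICM, the covariance between surface t at x and surface t′ at x′ is B[t][t′]·k(x, x′). This lets a densely measured surface inform a sparsely measured one. The inter-surface correlation matrix `B` is fixed by hand here, whereas in practice it is learned. The two test surfaces are hypothetical.

```python
import math

def rbf(a, b, length=0.5):
    """Squared-exponential kernel on 1-D positions."""
    return math.exp(-0.5 * (a - b) ** 2 / length ** 2)


def solve(A, b):
    """Gaussian elimination with partial pivoting; returns x with A x = b."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            M[r] = [a - f * v for a, v in zip(M[r], M[c])]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def icm_predict(obs, query, B, jitter=1e-4):
    """Multi-task GP mean with an ICM kernel k((x,t),(x',t')) = B[t][t'] * rbf(x, x').

    obs: list of (x, task, y); query: (x, task).
    """
    K = [[B[ti][tj] * rbf(xi, xj) + (jitter if i == j else 0.0)
          for j, (xj, tj, _) in enumerate(obs)]
         for i, (xi, ti, _) in enumerate(obs)]
    alpha = solve(K, [y for _, _, y in obs])
    xq, tq = query
    return sum(B[tq][t] * rbf(xq, x) * a for (x, t, _), a in zip(obs, alpha))


# Hypothetical data: surface 0 is densely measured, surface 1 only at its two ends.
f0 = math.sin
f1 = lambda x: 0.9 * math.sin(x)
obs = [(0.4 * i, 0, f0(0.4 * i)) for i in range(11)]
obs += [(x, 1, f1(x)) for x in (0.0, 4.0)]
B = [[1.0, 0.9], [0.9, 1.0]]  # assumed correlation between the two surfaces

multi = icm_predict(obs, (1.8, 1), B)
single = icm_predict([o for o in obs if o[1] == 1], (1.8, 1), B)
print(multi, single, f1(1.8))
```

With only its two end-point measurements, the single-task GP falls back to the prior mean (zero) at x = 1.8. The ICM model instead borrows shape information from surface 0.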

Deep Learning for Medical Image Segmentation

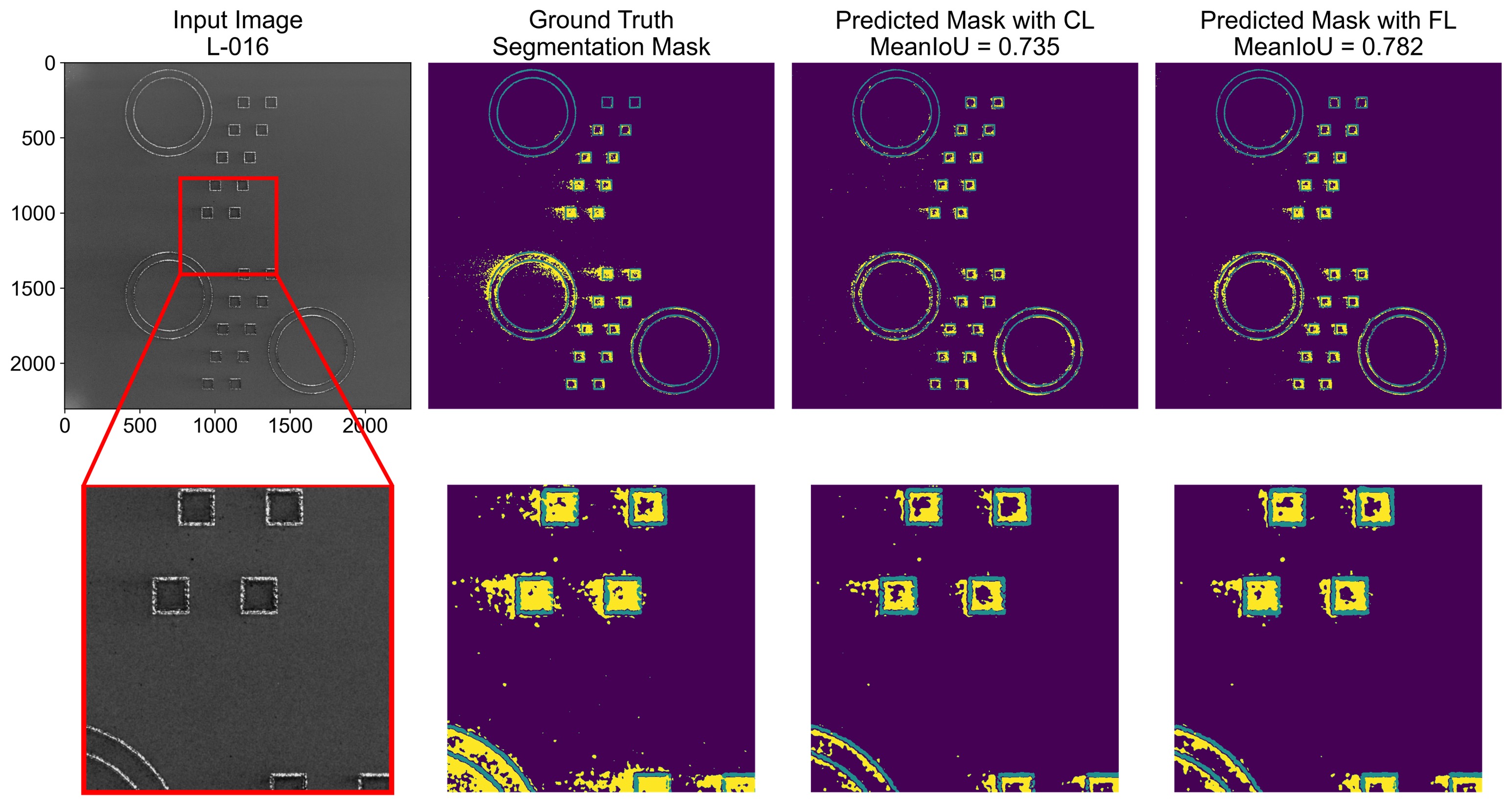

Deep learning has revolutionized medical image segmentation, enabling accurate and efficient delineation of anatomical structures and pathologies. My research focuses on developing deep learning models for medical image segmentation, with a particular emphasis on improving segmentation accuracy and robustness.

In one project, we developed a U-Net-based approach for segmenting brain tumors in MRI scans. The approach incorporates attention mechanisms that focus the network on relevant features to improve segmentation accuracy, and it outperforms a standard U-Net on tumors with complex shapes.
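The source does not specify which attention mechanism was added. One common choice in U-Nets is the additive attention gate of Attention U-Net, sketched below in plain Python so the arithmetic is visible. At each pixel, the gate works as follows:

1. The encoder skip feature x and the decoder gating signal g are each projected. These projections are 1×1 convolutions, written here as small matrix-vector products.
2. The two projections are summed and passed through ReLU.
3. The result is projected to a single channel and squashed by a sigmoid into a coefficient α in (0, 1).
4. α rescales x.

All weights and the two-pixel input are hypothetical. A real model would learn the weights and use a deep learning framework.

```python
import math

def relu(v):
    return max(0.0, v)


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(W, v):
    """W is a list of rows (out x in): a 1x1 convolution applied at one pixel."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def attention_gate(skip, gate, Wx, Wg, b, psi, b_psi):
    """Additive attention gate applied pixel-wise.

    skip: list of pixels, each a list of C_x channel values (encoder features).
    gate: list of pixels, each a list of C_g values (decoder gating signal),
          assumed already resampled to the skip resolution.
    Returns (gated skip features, attention coefficients).
    """
    out, alphas = [], []
    for x, g in zip(skip, gate):
        q = [relu(a + c + bi) for a, c, bi in zip(matvec(Wx, x), matvec(Wg, g), b)]
        alpha = sigmoid(sum(p * qi for p, qi in zip(psi, q)) + b_psi)
        alphas.append(alpha)
        out.append([alpha * xi for xi in x])
    return out, alphas


# Hypothetical weights: 2 skip channels, 1 gating channel, 1 intermediate channel.
Wx, Wg, b = [[0.5, 0.5]], [[2.0]], [0.0]
psi, b_psi = [3.0], -3.0
skip = [[1.0, 1.0], [1.0, 1.0]]  # identical encoder features at two pixels
gate = [[2.0], [-2.0]]           # decoder signal: strong at pixel 0, weak at pixel 1
out, alphas = attention_gate(skip, gate, Wx, Wg, b, psi, b_psi)
print(alphas)  # roughly [1.0, 0.047]
```

Pixels where the gating signal responds strongly keep their skip features (α ≈ 1). The others are suppressed before the skip features are concatenated in the decoder.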

In another project, we investigated transfer learning for medical image segmentation. The approach starts from pre-trained models to improve segmentation accuracy when training data is limited, which is particularly useful in medical imaging, where annotated data is often scarce.